Prof. TAN Ping Developed SAIL-Recon to Advance Large-Scale 3D Scene Reconstruction

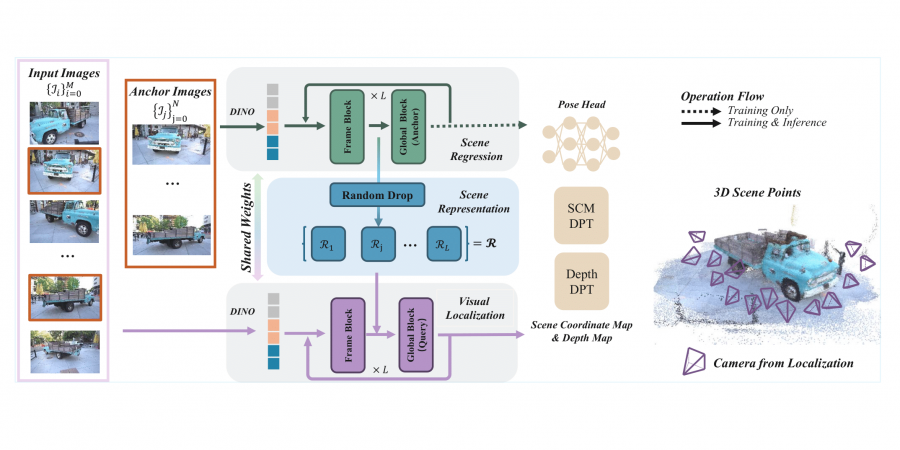

Scene regression methods such as VGGT (Visual Geometry Grounded Transformer) solve the Structure-from-Motion (SfM) problem by directly regressing camera poses and 3D scene structure from input images. They perform impressively on images with extreme viewpoint changes but struggle when the number of input images is large. To address this limitation, a research team led by Prof. TAN Ping has introduced SAIL-Recon, a feed-forward Transformer for large-scale SfM that augments the scene regression network with visual localization capabilities. Specifically, the method first computes a neural scene representation from a subset of anchor images. The regression network is then fine-tuned to reconstruct all input images conditioned on this neural scene representation. Comprehensive experiments show that the method not only scales efficiently to large scenes but also achieves state-of-the-art results on camera pose estimation and novel view synthesis benchmarks, including TUM-RGBD, CO3Dv2, and Tanks & Temples.
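The two-stage idea described above (build a scene representation from anchor images, then process every image conditioned on it) can be illustrated with a minimal Python sketch. This is not the SAIL-Recon implementation: the helper names `select_anchor_indices` and `reconstruct`, the evenly spaced anchor selection, the batching, and the `encode_scene` / `localize` callables are all hypothetical placeholders chosen for illustration.

```python
from typing import Callable, List, Sequence, TypeVar

Image = TypeVar("Image")
Scene = TypeVar("Scene")
Result = TypeVar("Result")


def select_anchor_indices(num_images: int, num_anchors: int) -> List[int]:
    """Pick evenly spaced image indices to serve as anchors.

    Assumption: uniform sampling is used here only for illustration;
    the article does not say how anchor images are chosen.
    """
    if num_images <= 0:
        return []
    num_anchors = max(1, min(num_anchors, num_images))
    if num_anchors == 1:
        return [0]
    step = (num_images - 1) / (num_anchors - 1)
    # A set removes duplicates that rounding might produce.
    return sorted({round(i * step) for i in range(num_anchors)})


def reconstruct(
    images: Sequence[Image],
    num_anchors: int,
    batch_size: int,
    encode_scene: Callable[[List[Image]], Scene],
    localize: Callable[[Scene, List[Image]], List[Result]],
) -> List[Result]:
    """Two-stage pipeline: encode anchors once, then process all images."""
    anchors = [images[i] for i in select_anchor_indices(len(images), num_anchors)]
    # Stage 1: a single scene representation computed from the anchors only.
    scene = encode_scene(anchors)
    # Stage 2: every image (anchors included) is handled in fixed-size
    # batches, each conditioned on the same scene representation.
    results: List[Result] = []
    for start in range(0, len(images), batch_size):
        batch = list(images[start:start + batch_size])
        results.extend(localize(scene, batch))
    return results
```

In this sketch the cost of stage 1 depends only on the number of anchors, while stage 2 handles a bounded batch at a time, which is one plausible reading of why conditioning on an anchor-based representation helps with large image collections.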

The research was published in a paper titled “SAIL-Recon: Large SfM by Augmenting Scene Regression with Localization”. Prof. TAN Ping, Professor in the Department of Electronic and Computer Engineering, Associate Director of the HKUST Von Neumann Institute, and Director of the HKUST-BYD Embodied AI Joint Laboratory, is one of the corresponding authors. His PhD students, DENG Junyuan and LI Heng, are co-first authors. Other collaborators are from the AI chip company Horizon Robotics and Zhejiang University.