HKUST President’s Cup Champion Named a Winner of Global Engineering Presentation Competition

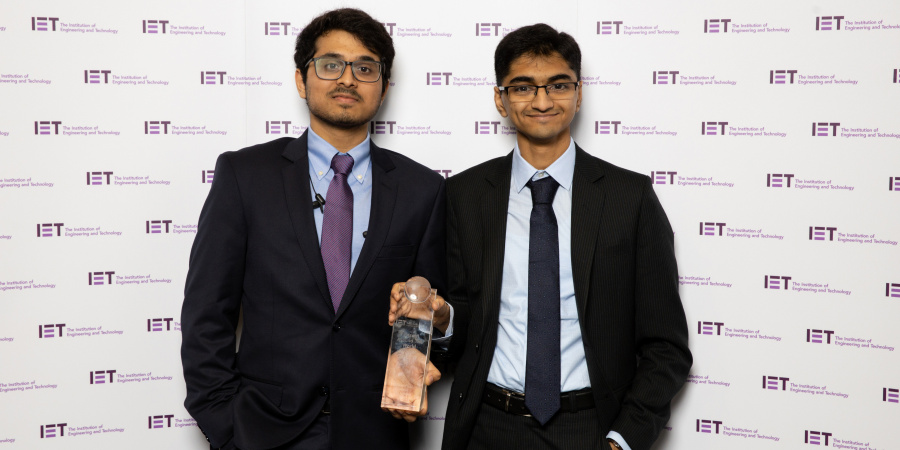

Third-year Computer Science undergraduate Padmanabhan KRISHNAMURTHY (Paddy) was named a winner of Present Around The World (PATW), a global competition run by the Institution of Engineering and Technology (IET) in which young engineering professionals and students develop and showcase their presentation skills.

Paddy was one of the five finalists who made it to the global final held in London on October 24, 2019. Representing the Asia Pacific region, he competed against finalists from the Americas; Europe, Middle East and Africa; South Asia; and the UK.

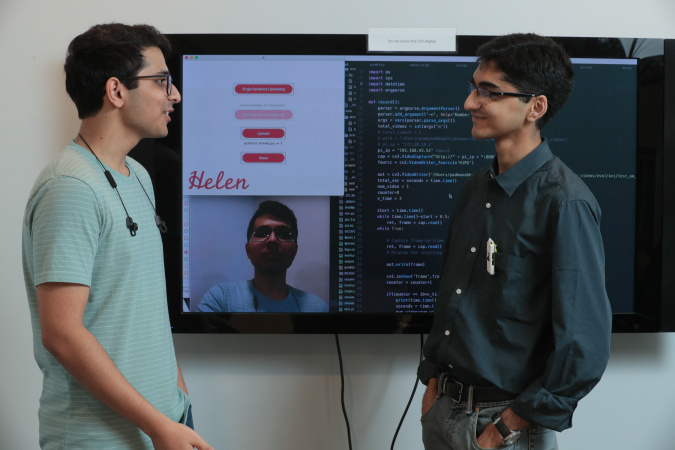

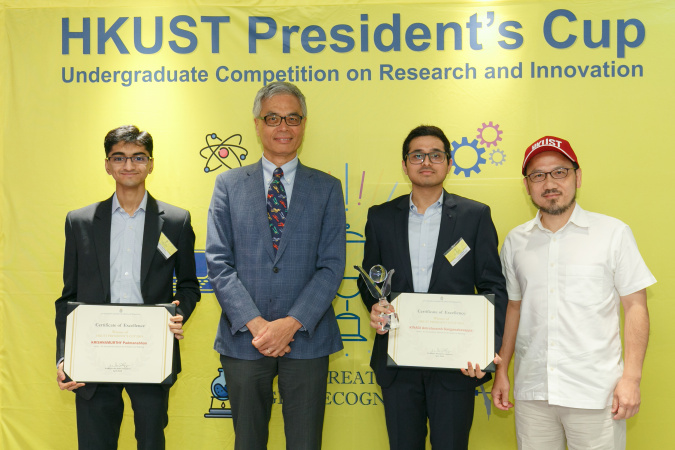

At the competition, he gave an engaging presentation of “Helen”, an accessibility device for AI-based lip reading that he co-created with his teammate Amrutavarsh Sanganabasappa KINAGI (Year 3, BSc in Computer Science and Mathematics) under the supervision of their advisor Prof. Brian MAK. The innovative project was the winner of the HKUST President’s Cup 2019, a university-wide competition that recognizes undergraduates for outstanding achievements in research and innovation.

A compact wearable camera that performs automated lip reading using deep learning, Helen takes in only visual images of a speaker, and outputs a transcription of the spoken content. It is named after Helen KELLER, the renowned American author and educator who overcame the adversity of being blind and deaf to become one of the 20th century’s leading humanitarians.

People with hearing impairment face many difficulties in communicating. Hearing aids are a common solution, but they rely on audio alone and do not work well in noisy surroundings. Helen opens up an entirely new visual dimension for the hearing impaired to receive information, because it captures speech from visual information instead of relying on audio. It uses a camera to stream video of a speaker to a remote device that runs LipNet, a model co-developed by the University of Oxford, DeepMind, and CIFAR that maps a variable-length sequence of video frames to text.

Helen was the champion of the undergraduate section and received the Best Innovation Award at the IET’s Young Professionals Exhibition & Competition (YPEC) 2019 in Hong Kong in July, enabling the two students to advance to the global final. The project was also a runner-up in the James Dyson Award 2019 in the Hong Kong region.

The pair see each other as great teammates and feel fulfilled to have built Helen, a meaningful device that helps people with disabilities communicate more easily and has been recognized by international organizations. They are grateful for the support, guidance and resources that HKUST and Prof. Mak provided. “Without them, there would be no Helen and we would not be able to achieve so much,” they said.
