HKUST Engineering Students Develop Revolutionary Mobile App for Learning Sign Language

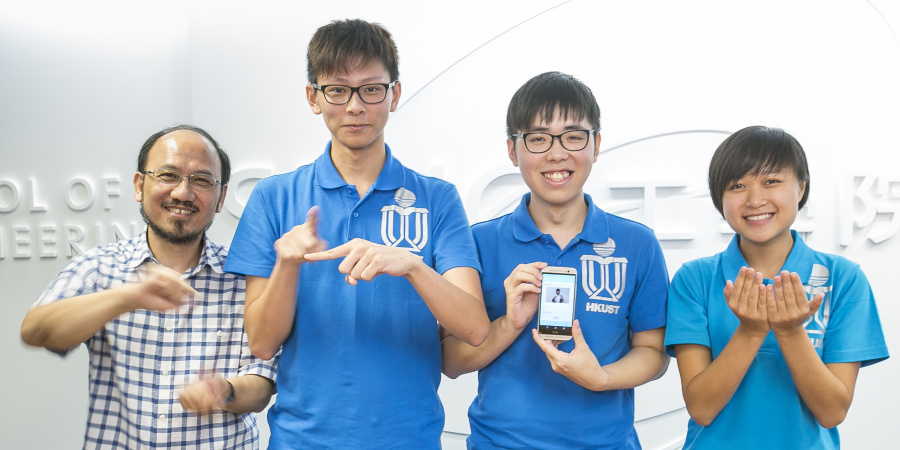

Three recent graduates of Computer Science and Engineering at the School of Engineering, The Hong Kong University of Science and Technology (HKUST), have developed a mobile app that can translate Chinese sentences into sign language.

The app is revolutionary in that it can immediately translate a whole Chinese sentence into a sign language sentence, whereas existing sign language translation apps can typically translate only individual words or terms.

The app brings communication with hearing-impaired people to a new level, and particularly benefits sign language learners who need to familiarize themselves with sign language syntax.

Prof Brian Mak, Associate Professor in the Department of Computer Science and Engineering at HKUST, said, “HKUST is keen on giving back to society, especially to the underprivileged people, including people with hearing challenges. This project is a good example of our passion to serve.

“A recent survey indicates that there are in Hong Kong about 50,000 people who are either totally deaf or are hearing-impaired, but the number of professional sign language interpreters is low – only about 54 in total. Hence there is a great need for people who can do sign language interpretation, and this new app will definitely contribute to the training of these interpreters, and to the communication between the general public and people with hearing challenges.”

The development of this app was the final year project for the three students – Ken Ka-wai Lai, Mary Ming-fong Leung, and Kelvin Wai-chiu Yung.

The team first investigated the apps currently available for sign language interpretation and how they could be improved.

Ken Lai said, “One of the major challenges in developing the app is that the sentence structure of sign language is somewhat different from that of the Chinese language. In sign language, adjectives, adverbs, numbers and question words are normally placed after the noun, which is not the case in Chinese.”
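To give a rough sense of the word-order difference Ken describes, the sketch below moves modifiers so that they follow the noun. It is an illustration only and not the team's algorithm: the tag names, the `reorder_for_sign` function and the English glosses are all hypothetical, and the rule is far simpler than real sign language grammar.

```python
# Illustrative only: assumes the sentence is already segmented and each
# token carries a part-of-speech tag. The tag set is hypothetical.
MODIFIER_TAGS = {"ADJ", "ADV", "NUM", "QUESTION"}


def reorder_for_sign(tagged_tokens):
    """Return the words with modifiers placed after the next noun."""
    output = []
    pending = []  # modifiers waiting for a noun to follow
    for word, tag in tagged_tokens:
        if tag in MODIFIER_TAGS:
            pending.append(word)
        elif tag == "NOUN":
            # Emit the noun first, then the modifiers that preceded it.
            output.append(word)
            output.extend(pending)
            pending = []
        else:
            # No noun arrived for the waiting modifiers: keep their order.
            output.extend(pending)
            pending = []
            output.append(word)
    output.extend(pending)
    return output


sentence = [("I", "PRON"), ("have", "VERB"), ("two", "NUM"),
            ("red", "ADJ"), ("apple", "NOUN")]
print(reorder_for_sign(sentence))
```

With this toy rule, the modifiers "two" and "red" end up after "apple", while the rest of the sentence keeps its original order.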

Kelvin Yung added, “Our challenge therefore is to design a new sentence segmenting algorithm that is in line with sign language usage. On this basis, we make use of the FFmpeg software to carry out video synthesis – putting the various signs performed on video together to form a complete sentence.”
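The video synthesis step Kelvin mentions could look something like the sketch below, which prepares input for FFmpeg's concat demuxer. The article does not say how the team actually invoked FFmpeg, so this is an assumption: the one-clip-per-sign file naming, the helper names and the paths are hypothetical, and `-c copy` only works when all clips share the same codec and encoding parameters.

```python
from pathlib import Path


def clip_paths_for(signs, clip_dir):
    """Map each sign to a clip path (hypothetical naming: <sign>.mp4)."""
    return [(Path(clip_dir) / f"{sign}.mp4").as_posix() for sign in signs]


def build_concat_list(clip_paths):
    """Build the text of a list file for FFmpeg's concat demuxer."""
    lines = []
    for path in clip_paths:
        # Inside single quotes, a literal quote is written as '\''
        escaped = str(path).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def build_ffmpeg_command(list_file, output_file):
    """Return the argument list that joins the listed clips into one video."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", str(list_file), "-c", "copy", str(output_file)]


clips = clip_paths_for(["apple", "two", "red"], "clips")
print(build_concat_list(clips), end="")
print(" ".join(build_ffmpeg_command("sentence.txt", "sentence.mp4")))
```

To produce a video, the list text would be written to `sentence.txt` and the command passed to `subprocess.run`. The sketch stops short of that so it can be read and run without FFmpeg or any video files installed.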

Mary Leung, who has a good command of sign language, was naturally the ‘model’ for the sign language video clips. “It was a very memorable experience for me – I recorded over 1,700 words and expressions in sign language over a span of half a year. For the best result, I had to wear the same hairstyle and clothing during the entire period. Despite this constraint, the result is tremendously rewarding for me.”

Looking ahead, the team identified three major areas for further refinement. Ken said, “First, we think machine learning can be brought in to improve text segmentation, to incorporate a large amount of data on sign language grammar, and to develop a more powerful model than the current one. Second, we think the image processing can be improved for smoother transition between words. Finally, we think in the long run it is better to use computer graphics than real people for greater consistency of the images.”