SENG News

Research Excellence and Real-World Impact in Sustainable Urban Drainage Management

Innovations in Software-Hardware Co-Design

Cutting Costs and Greenhouse Gas Emissions by 11% and 47%, Respectively

Achieving a 20‑Fold Efficiency Increase and a 50% Cost Reduction

Set to Begin in 2026/27 Academic Year

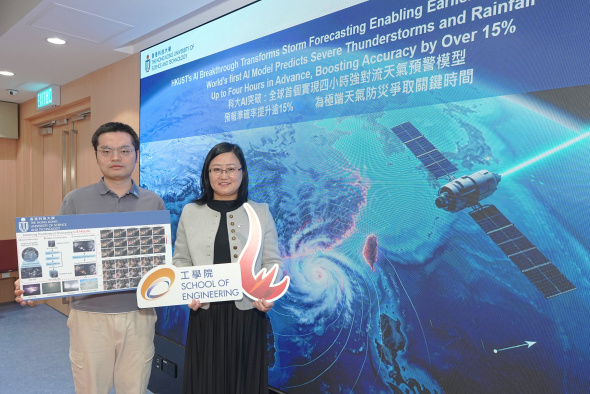

World’s First AI Model Predicts Severe Thunderstorms and Rainfall Up to Four Hours in Advance, Boosting Accuracy by Over 15%

Strengthening Talent Development and Research Innovation

Opening Ceremony and Poon Choi Party Drew 300+ Alumni and Friends